Creating a Simple but Effective Outbound "Firewall" using Vanilla Docker-Compose

By Forest Johnson

While I was updating my server infrastructure, I came upon a new option in the docker-compose.yml version 3 schema: "internal" bridged networks.

> internal
>
> By default, Docker also connects a bridge network to it to provide external connectivity. If you want to create an externally isolated overlay network, you can set this option to true.

This option seems rather unassuming, perhaps easy to miss, but I think it's actually quite powerful & potentially useful for securing applications which are running in Docker.

But before I can talk about internal networks, some background:

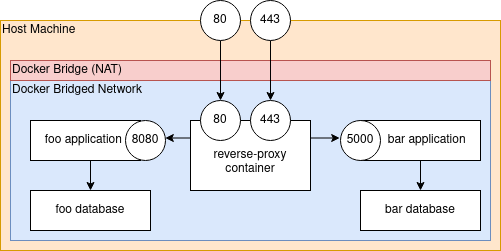

We can use reverse proxy servers like nginx and Caddy to control which containers can be connected to, who can connect, on what ports they can connect, etc. I believe this is a fairly widespread practice among docker users.

The docker default network driver, the "bridged" network, doesn't allow connections from the outside world directly into containers. (On a Linux host, processes on the host itself can usually still reach container IPs through the bridge.) This is similar to the way most home routers don't allow inbound connections from the internet into your home computer & other devices on the network: it uses NAT (Network Address Translation).

Typically, docker users employ port mapping to "publish" listening ports from the containers to the host machine. This works exactly like configuring "port forwarding" on a home router.

So a typical use case would look like this:

The reverse-proxy server listens on the public HTTP ports 80 and 443, and publishes those ports to the host. Then the reverse proxy is configured to forward valid HTTP connections to the foo app and the bar app. If a device on the network tries to connect to the foo app or the bar app directly (on port 8080 or port 5000), it won't be able to, because the foo app and bar app containers are not routable from that location on the network. The docker bridge NAT can't and won't allow that kind of connection; additional steps would be necessary to connect.

This is great for inbound connections, but it does nothing to control outbound connections. Just like the NAT in your home network, the docker bridge is designed to allow outbound connections, not restrict them. It's possible to configure docker to use a different network driver to control outbound connections, like a 3rd party one or one that you wrote yourself, but in my experience, this gets complicated fast and has a lot of potential to fail catastrophically. I would much rather find a way to control outbound connections using the vanilla docker and docker-compose features. So that's what I set out to do, and that's where the internal networks come into play.

On an internal network, you can't connect to the internet at all. You also can't connect to the host. That's great for security, not so great for functionality. Ideally, we could allow some connections to the outside world while denying others. As far as I know, docker doesn't have any built-in mechanism for this, but I didn't let that stop me.

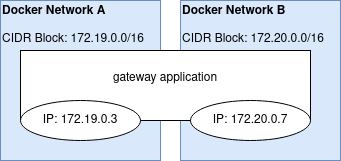

The trick is, a docker container can be attached to more than one network. This allows us to create "gateway" containers that control how traffic flows between two or more networks. When we do this, the container gets allocated a separate IP address on each network.

(This would be similar to an imaginary situation where your computer had multiple ethernet ports and you had plugged it into multiple different routers on different networks)

When the program running in the container tries to open a connection, it asks the OS, which knows which IP address to send that connect packet from (which "ethernet port" to send the request out of) because it can consult its routing table, which holds a route for each attached network.
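To see this source-address selection in action, here is a minimal Python sketch. The helper name `source_ip_for` is my own invention for illustration. It relies on the fact that connecting a UDP socket sends no packets: it only asks the kernel to pick a route, and with it a local address.

```python
import socket


def source_ip_for(destination: str, port: int = 53) -> str:
    """Return the local IP the OS would use to reach `destination`.

    Connecting a UDP socket does not send anything on the wire. It only
    makes the kernel consult its routing table and bind the socket to the
    matching local address, which we then read back.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.connect((destination, port))
        return sock.getsockname()[0]


# Loopback traffic is sourced from the loopback interface.
print(source_ip_for("127.0.0.1"))
```

Run inside a container attached to two networks, calling this with an address on each network should return the container's IP on that respective network.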

So if I wanted to implement an outbound firewall in vanilla docker-compose, I would have to use a gateway container to implement my "allowed servers" whitelist, aka my "firewall".

It's actually not a firewall, it's technically a forward proxy server. But when we combine:

- An internal docker network
- A normal bridged docker network
- A proxy server that spans both networks

We get something that vaguely resembles an outbound firewall. Of course, there are lots of fiddly bits to figure out before this will actually work in the general case. In typical technology fashion, each problem is caused by the solution to the last 😃
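To make the "proxy server that spans both networks" idea concrete, here is a rough Python sketch of a listen-and-dial TCP forwarder. This is not the code of the proxy used later in this post; the function names (`pipe`, `handle`, `serve`, `start_forwarder`) are made up for illustration, and a real deployment would want logging, connection limits, and better timeout handling.

```python
import socket
import threading


def pipe(src: socket.socket, dst: socket.socket) -> None:
    """Copy bytes from src to dst until src closes, then half-close dst."""
    try:
        while True:
            data = src.recv(65536)
            if not data:
                break
            dst.sendall(data)
    except OSError:
        pass
    finally:
        try:
            dst.shutdown(socket.SHUT_WR)
        except OSError:
            pass


def handle(client: socket.socket, dial_host: str, dial_port: int) -> None:
    """Dial the one allowed upstream and shuttle bytes in both directions."""
    try:
        upstream = socket.create_connection((dial_host, dial_port), timeout=10)
    except OSError:
        client.close()
        return
    upstream.settimeout(None)  # the timeout above was only for connecting
    back = threading.Thread(target=pipe, args=(upstream, client), daemon=True)
    back.start()
    pipe(client, upstream)
    back.join()
    client.close()
    upstream.close()


def serve(listener: socket.socket, dial_host: str, dial_port: int) -> None:
    """Accept connections until the listener is closed."""
    while True:
        try:
            client, _ = listener.accept()
        except OSError:
            return
        threading.Thread(
            target=handle, args=(client, dial_host, dial_port), daemon=True
        ).start()


def start_forwarder(listen_port: int, dial_host: str, dial_port: int,
                    listen_host: str = "0.0.0.0") -> socket.socket:
    """Start forwarding in a background thread and return the listener."""
    listener = socket.create_server((listen_host, listen_port))
    threading.Thread(
        target=serve, args=(listener, dial_host, dial_port), daemon=True
    ).start()
    return listener
```

The "whitelist" here is simply the fact that each listening port can only ever reach the single destination it was started with, for example `start_forwarder(465, "smtp.gmail.com", 465)`.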

Problem 1: Domain Names and TLS Certificate Validation

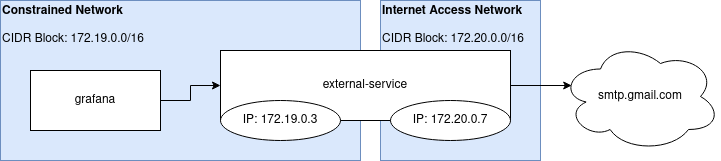

In a naive implementation, we would write something like this.

docker-compose.yml

    version: "3.3"

    networks:
      internet-access:
        driver: bridge
      constrained:
        driver: bridge
        internal: true

    services:
      external-service:
        image: sequentialread/external-service:0.0.12
        networks:
          - constrained
          - internet-access
        environment:
          SERVICE_0_LISTEN: ':465'
          SERVICE_0_DIAL: 'smtp.gmail.com:465'
      grafana:
        image: grafana/grafana:7.4.3
        networks:
          - constrained
        environment:
          GF_SMTP_HOST: 'external-service:465'
          GF_SMTP_PASSWORD: ${GMAIL_PASSWORD}
          GF_SMTP_USER: my-kool-alertz@gmail.com

The problem here: when grafana connects to external-service (and indirectly, connects to smtp.gmail.com) over SMTP, we really want that session to be protected by TLS, because we are sending our gmail password over the wire to log in. But the TLS handshake will fail: as far as grafana can tell, it's connecting to a server named external-service, while the certificate it was presented with is only valid for smtp.gmail.com. It will get a "bad certificate domain" TLS error.

So we have to somehow "trick" grafana into thinking it's connecting to smtp.gmail.com. Which brings us to...

Problem 2: extra_hosts doesn't support container names and can't be easily templated

There is a docker-compose service property called extra_hosts which does exactly what we want. It inserts entries into the /etc/hosts file inside the container. So, we can simply create an extra_hosts entry for smtp.gmail.com pointing to the external-service container.

So, the naive user might write this:

    ...
      grafana:
        image: grafana/grafana:7.4.3
        networks:
          - constrained
        extra_hosts:
          - smtp.gmail.com:external-service
        environment:
          GF_SMTP_HOST: 'smtp.gmail.com:465'
    ...

However, this won't work. We can't simply pass in the domain name of the container (or any other domain name) here: because it's a hosts file entry, it must be an IP address. Moreover, as far as I can tell, docker-compose doesn't come with any feature which would allow us to template the IP address of one container into the config of another. It's almost as if we need to know what the IP address of the container will be ahead of time...

Normally this would be impossible because by default, docker assigns each container's IP address dynamically from the network's address pool, so it's fairly unpredictable. However, there are IPAM (IP Address Management) options in the docker-compose schema which allow us to set CIDR (Classless Inter-Domain Routing) blocks for network subnets and set static IP addresses for containers.
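Before pinning a static address, it can help to sanity-check that the address is a real IP (not a name) and that it actually falls inside the chosen subnet. Here is a small Python sketch using the standard `ipaddress` module. The helper name `check_static_ip` is hypothetical, and the rule about avoiding the first host address is an assumption based on Docker's default IPAM usually handing that address to the bridge gateway.

```python
import ipaddress


def check_static_ip(cidr: str, address: str) -> None:
    """Raise ValueError if `address` is not a usable static IP inside `cidr`."""
    network = ipaddress.ip_network(cidr)
    # ip_address() raises ValueError for anything that is not an IP,
    # e.g. a container name like "external-service".
    ip = ipaddress.ip_address(address)
    if ip not in network:
        raise ValueError(f"{ip} is outside {network}")
    if ip in (network.network_address, network.broadcast_address):
        raise ValueError(f"{ip} is reserved within {network}")
    # Assumption: the first host address is typically taken by the gateway.
    if ip == next(iter(network.hosts())):
        raise ValueError(f"{ip} is probably the gateway of {network}")


# Passes silently: the address sits inside the subnet.
check_static_ip("172.19.0.0/16", "172.19.0.120")
```

Passing a container name instead of an address, such as `check_static_ip("172.19.0.0/16", "external-service")`, raises `ValueError`, which mirrors why a hosts file entry can't point at a name.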

So, we can leverage those features to achieve what we want. I decided to store the CIDR blocks and static IPs in the .env file to make things cleaner and easier to follow:

.env

    CONSTRAINED_NETWORK_CIDR=172.19.0.0/16
    CONSTRAINED_NETWORK_EXTERNAL_SERVICE_IPV4=172.19.0.120

docker-compose.yml

    version: "3.3"

    networks:
      internet-access:
        driver: bridge
      constrained:
        driver: bridge
        internal: true
        ipam:
          driver: default
          config:
            - subnet: "${CONSTRAINED_NETWORK_CIDR}"

    services:
      external-service:
        image: sequentialread/external-service:0.0.12
        networks:
          constrained:
            ipv4_address: ${CONSTRAINED_NETWORK_EXTERNAL_SERVICE_IPV4}
          internet-access:
        environment:
          SERVICE_0_LISTEN: ':465'
          SERVICE_0_DIAL: 'smtp.gmail.com:465'
      grafana:
        image: grafana/grafana:7.4.3
        networks:
          - constrained
        extra_hosts:
          - smtp.gmail.com:${CONSTRAINED_NETWORK_EXTERNAL_SERVICE_IPV4}
        environment:
          GF_SMTP_HOST: 'smtp.gmail.com:465'
          GF_SMTP_PASSWORD: ${GMAIL_PASSWORD}
          GF_SMTP_USER: my-kool-alertz@gmail.com

⚠️ NOTE that when switching an existing compose file to use IPAM, docker-compose may not correctly realize that it has to re-create networks. You might have to delete the networks manually or run

    docker-compose down && docker-compose up -d

For some containers, this may be enough, and it may actually work. Now the grafana container is connecting to what it thinks is smtp.gmail.com, so TLS should go off without a hitch. But in my case, it didn't work on my grafana container because,

Problem 3: some docker containers completely ignore the /etc/hosts file

The many different linux operating systems, application programming tools, paradigms, etc, don't always play nice together. There are probably over 100 ways to configure DNS on linux which have been implemented throughout history, and many of them are still in use today. To make matters worse, the operating system and the application often have to work together, come to some sort of understanding with each other, for DNS resolution to work the way we expect. I can't even begin to offer a comprehensive guide to configuring DNS on linux, but I can give one example of a problem and solution which has been perennial throughout my years with docker.

I was clued in that this was the problem when I asked Grafana to send an email alert and saw this in the logs:

t=2021-03-13T03:17:52+0000 lvl=info msg="Sending alert notification to" logger=alerting.notifier.email addresses=[forest.n.johnson@gmail.com] singleEmail=false

t=2021-03-13T03:18:02+0000 lvl=eror msg="Failed to send alert notification email" logger=alerting.notifier.email error="Failed to send notification to email addresses: forest.n.johnson@gmail.com: dial tcp: i/o timeout"

t=2021-03-13T03:18:02+0000 lvl=eror msg="Failed to send alert notifications" logger=context userId=1 orgId=1 uname=admin error="Failed to send notification to email addresses: forest.n.johnson@gmail.com: dial tcp: i/o timeout" remote_addr=192.168.0.1

The key here was on the last line remote_addr=192.168.0.1. Somehow the stupid thing had resolved the common LAN gateway IP 192.168.0.1 for smtp.gmail.com. This was obviously the wrong IP, indicating that the container was not using the hosts file, and it also couldn't talk to any DNS server. This was actually kind of fun to see & encouraging, that my firewall was working. The poor thing couldn't even look up domain names. Nice try, DNS exfiltration!

If the application running inside your docker container is ignoring entries in your hosts file, it may be because the /etc/nsswitch.conf file is misconfigured or missing.
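One way to check is to look at the `hosts:` line of that file and see whether `files` (meaning /etc/hosts) is listed, and in what order. Here is a minimal Python sketch of that check. The helper name `hosts_sources` is hypothetical, and it only understands the simple `database: source source ...` layout, skipping bracketed action items like `[NOTFOUND=return]`.

```python
from typing import List


def hosts_sources(nsswitch_text: str) -> List[str]:
    """Return the lookup sources on the `hosts:` line, in order.

    An empty list means there is no `hosts:` line at all, which is what
    you would also get from a missing or empty file.
    """
    for line in nsswitch_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if line.startswith("hosts:"):
            tokens = line[len("hosts:"):].split()
            return [tok for tok in tokens if not tok.startswith("[")]
    return []


# The configuration used later in this post: hosts file first, then DNS.
print(hosts_sources("hosts: files dns"))
```

To use it against a real container, the text could come from the file's contents copied out of the container. If `files` is absent from the result, or the result is empty, the hosts file entries added by extra_hosts may never be consulted.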

So I decided to try to simply configure my grafana container to write its own /etc/nsswitch.conf file on startup. First, I had to figure out what the grafana container was doing by default when it started up, because I would need to mimic that behavior myself in the next step. So I asked docker to inspect the container, and then I searched for two properties, entrypoint and cmd. I'm using grep with the -i (case insensitive search) and -A (show n lines After match) flags because the output of docker inspect is quite long and it's a pain to scroll through.

    $ docker inspect sequentialread_grafana_1 | grep -i -A 3 "entrypoint"
        "Entrypoint": [
            "/run.sh"
        ],

    $ docker inspect sequentialread_grafana_1 | grep -i -A 3 "cmd"
        "Cmd": null,
        "Image": "grafana/grafana:7.4.3",
        "Volumes": null,

So now we know that the docker entrypoint is the script /run.sh, and the command is null, meaning no extra arguments are passed to /run.sh. Simple enough 😃. All I have to do now:

- Set the entrypoint to a shell so I can pass it a script to run when the container starts.
- Write a script which configures /etc/nsswitch.conf and then calls /run.sh.

      grafana:
        image: grafana/grafana:7.4.3
        entrypoint: /bin/sh
        command: [
          "-c",
          "echo 'hosts: files dns' > /etc/nsswitch.conf && /run.sh"
        ]
    ...

This looked good to me, so I gave it a whirl, leading to...

Problem 4: some docker containers don't run as root by default, meaning /etc/nsswitch.conf won't be writable

The grafana docker container is configured to run as the "grafana" user, who is a low-privilege user & not allowed to write system configuration files like /etc/nsswitch.conf. So not only did I have to monkey-patch the startup script of the container, I had to monkey-patch the user as well. This is done by overriding the user for the container to 0, and then wrapping the call to /run.sh with su to make sure we run /run.sh as the grafana user. (the root user's user id is 0)

      grafana:
        image: grafana/grafana:7.4.3
        user: '0'
        entrypoint: /bin/sh
        command: [
          "-c",
          "echo 'hosts: files dns' > /etc/nsswitch.conf && su -s '/bin/sh' -c '/run.sh' grafana"
        ]
    ...

After this, it finally worked and I was able to receive an alert email!

If you wish, you can check out my full docker-compose as well as the source code of the external-service TCP proxy application.
